All You Need is SQS, Not Kinesis: A Comprehensive Performance and Usability Analysis

September 21, 2024

Introduction

In my previous article, I delved into the complexities of Amazon Kinesis and the challenges it presents to developers. Today, we're taking a deeper dive into the world of message queues and stream processing by comparing Amazon Simple Queue Service (SQS) with Amazon Kinesis. The goal of this article is to provide a comprehensive analysis that will help you make an informed decision about which service best suits your needs.

Understanding SQS and Kinesis: A Brief Overview

Before we jump into the performance comparison, let's briefly review what SQS and Kinesis are, and their primary use cases.

Amazon SQS

Amazon SQS is a fully managed message queuing service that enables you to decouple and scale microservices, distributed systems, and serverless applications. It offers two types of message queues:

- Standard queues: Offer maximum throughput, best-effort ordering, and at-least-once delivery.

- FIFO queues: Provide exactly-once processing and strict ordering.
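At-least-once delivery on standard queues means a consumer may occasionally see the same message twice, so handlers are usually written to tolerate duplicates. Here is a minimal sketch of that idea in Go, assuming each message carries a unique ID. The `Message` type, `Deduper`, and `ProcessOnce` are hypothetical names for illustration and are not part of any AWS SDK.

```go
package main

import "fmt"

// Message is a hypothetical, simplified stand-in for a received queue message.
type Message struct {
	ID   string
	Body string
}

// Deduper remembers which message IDs have already been handled.
// The map is in-memory and unbounded, which is an assumption made for brevity.
type Deduper struct {
	seen map[string]struct{}
}

// NewDeduper returns an empty Deduper.
func NewDeduper() *Deduper {
	return &Deduper{seen: make(map[string]struct{})}
}

// FirstTime reports whether id has not been seen before, and records it.
func (d *Deduper) FirstTime(id string) bool {
	if _, ok := d.seen[id]; ok {
		return false
	}
	d.seen[id] = struct{}{}
	return true
}

// ProcessOnce calls handle once per distinct message ID and returns
// how many messages were actually handled.
func ProcessOnce(msgs []Message, d *Deduper, handle func(Message)) int {
	handled := 0
	for _, m := range msgs {
		if d.FirstTime(m.ID) {
			handle(m)
			handled++
		}
	}
	return handled
}

func main() {
	// Message "a" is delivered twice, as can happen with at-least-once delivery.
	deliveries := []Message{
		{ID: "a", Body: "first"},
		{ID: "b", Body: "second"},
		{ID: "a", Body: "first"},
	}
	n := ProcessOnce(deliveries, NewDeduper(), func(m Message) {
		fmt.Println("handling", m.ID)
	})
	fmt.Println("handled:", n)
}
```

A real service would more likely keep the seen IDs in a shared store with an expiry, since an in-process map is lost on restart.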

SQS is ideal for workloads where you need reliable message delivery without the complexity of managing a message broker.

Amazon Kinesis

Amazon Kinesis, on the other hand, is a platform for streaming data on AWS, offering powerful services to load and analyze streaming data. It consists of four main services:

- Kinesis Data Streams: For collecting and processing large streams of data records in real-time.

- Kinesis Data Firehose: To load streaming data into data stores and analytics tools.

- Kinesis Data Analytics: For processing and analyzing streaming data using SQL or Java.

- Kinesis Video Streams: For streaming video from connected devices to AWS for analytics and machine learning.

In our comparison, we'll focus primarily on Kinesis Data Streams, as it's the most comparable to SQS in terms of message handling.

Methodology for Comparing SQS and Kinesis

To provide a fair and comprehensive comparison, I've set up a testing environment using AWS CDK. This approach ensures reproducibility and allows for easy modifications to the test parameters.

Setting Up the Test Environment

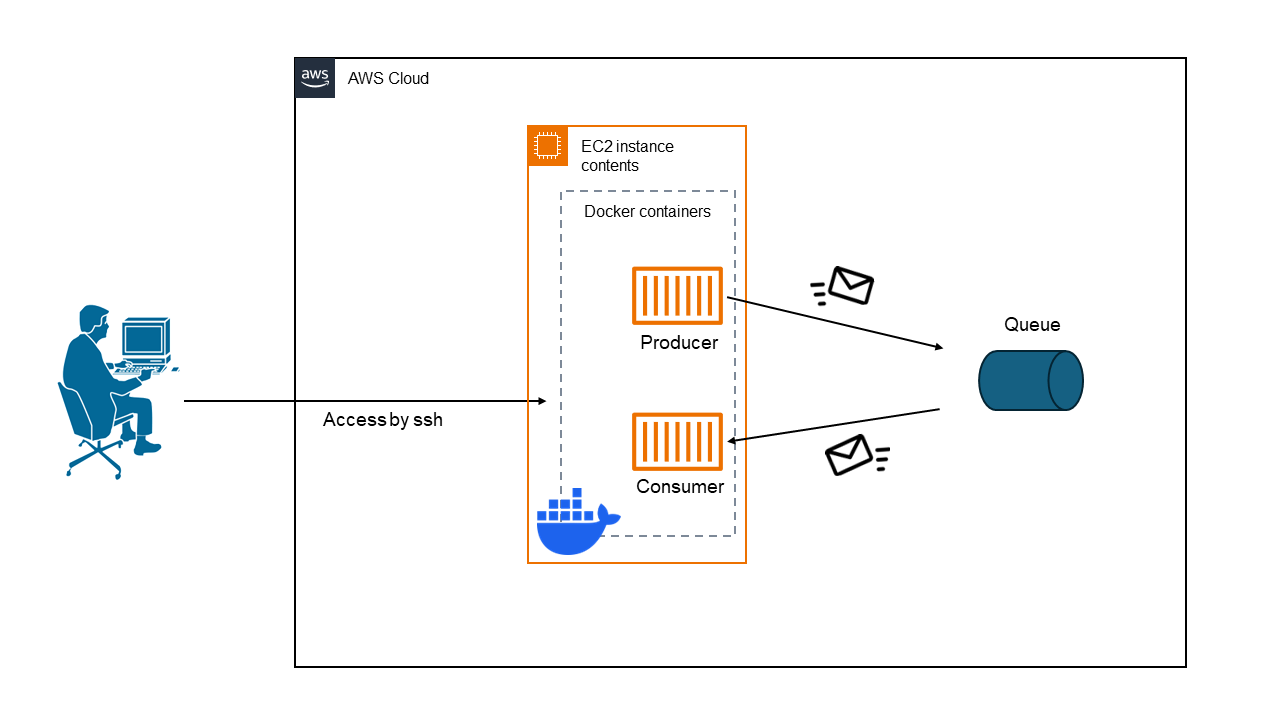

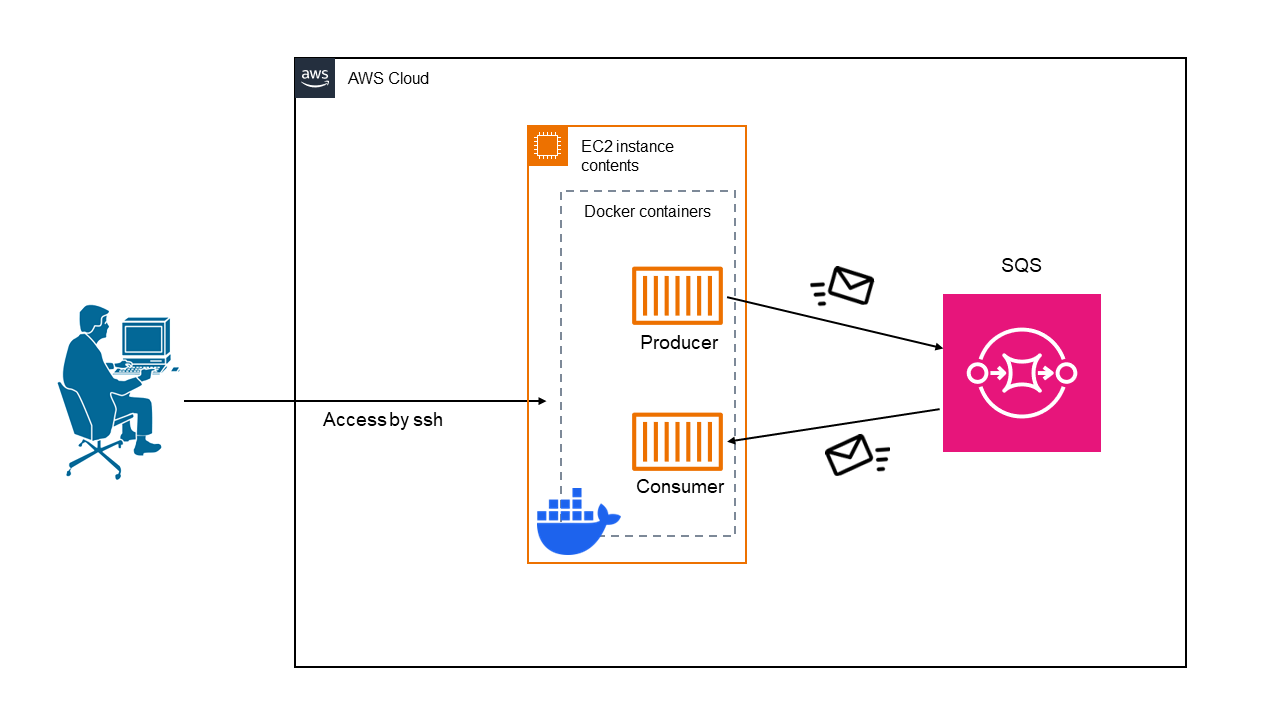

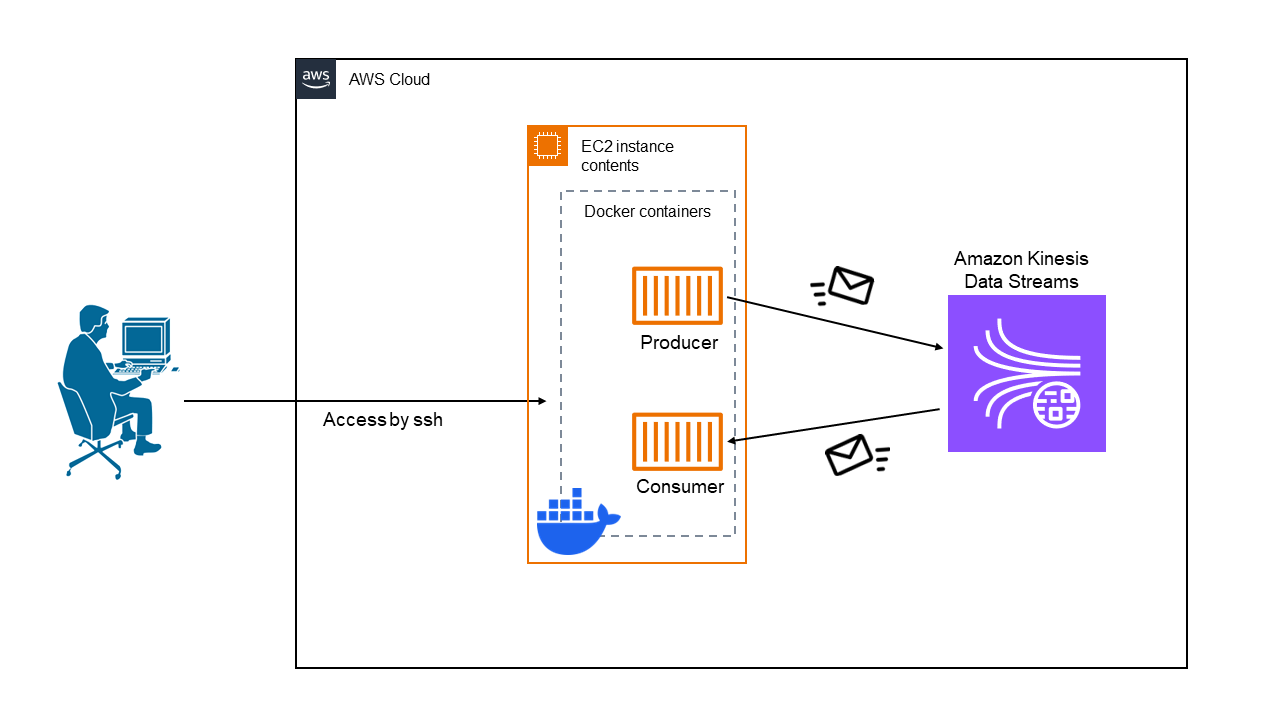

The core of our test environment consists of:

- An EC2 instance running Docker containers

- Containers for data generation, pushing to SQS/Kinesis, and fetching from SQS/Kinesis

- AWS SDK for Go (v2) for interacting with AWS services

The complete CDK stack is available in this repository:

To get started with the test environment:

- Clone the repository

- Deploy the CDK stack to your AWS account

- SSH into the EC2 instance

- Run `docker compose up` to start the test containers

Performance Measurement Approach

Our performance testing methodology involves the following steps:

- Data Generation: We create messages with timestamps indicating when they were generated.

- Data Pushing: We push these messages to both SQS and Kinesis at varying frequencies.

- Data Retrieval: We fetch the messages from SQS and Kinesis, measuring the time difference between when the message was created and when it was retrieved.

- Data Analysis: We analyze the collected timing data to compare the performance of SQS and Kinesis under different conditions.
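The generation and retrieval steps above can be sketched roughly as follows. This is an illustration, not the code from the test repository: the `Payload` type, its JSON field names, and the `Encode` and `LatencyNs` helpers are all assumptions. The creation time travels inside the message body, and the consumer subtracts it from its own receive time.

```go
package main

import (
	"encoding/json"
	"fmt"
	"time"
)

// Payload is a hypothetical message body; the field names are assumptions.
type Payload struct {
	Seq           int   `json:"seq"`
	CreatedUnixNs int64 `json:"created_unix_ns"`
}

// Encode serializes a payload stamped with its creation time in nanoseconds.
func Encode(seq int, createdNs int64) (string, error) {
	b, err := json.Marshal(Payload{Seq: seq, CreatedUnixNs: createdNs})
	return string(b), err
}

// LatencyNs decodes a message body and returns the nanoseconds elapsed
// between its creation timestamp and the given receive time.
func LatencyNs(body string, receivedNs int64) (int64, error) {
	var p Payload
	if err := json.Unmarshal([]byte(body), &p); err != nil {
		return 0, err
	}
	return receivedNs - p.CreatedUnixNs, nil
}

func main() {
	body, err := Encode(1, time.Now().UnixNano())
	if err != nil {
		panic(err)
	}
	// In the real test, the body would travel through SQS or Kinesis here.
	lat, err := LatencyNs(body, time.Now().UnixNano())
	if err != nil {
		panic(err)
	}
	fmt.Printf("latency: %d ns\n", lat)
}
```

Because the producer and consumer containers run on the same EC2 instance, they read the same system clock, so subtracting the two timestamps is meaningful without any clock synchronization.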

Key points to note:

- Time measurements are in nanoseconds for high precision.

- We focus on data points 4001 to 5000 to account for any warm-up effects.

- We test with different message pushing frequencies to simulate various load scenarios.
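To make the warm-up window concrete, here is a small sketch of how samples 4001 to 5000 could be selected and summarized. The `Window` and `Summarize` helpers and the nearest-rank percentile rule are assumptions for illustration; the article does not say how its statistics were computed.

```go
package main

import (
	"fmt"
	"sort"
)

// Window returns samples first..last (1-based, inclusive), clamped to the data.
func Window(samples []int64, first, last int) []int64 {
	if first < 1 {
		first = 1
	}
	if last > len(samples) {
		last = len(samples)
	}
	if first > last {
		return nil
	}
	return samples[first-1 : last]
}

// Summary holds basic latency statistics in nanoseconds.
type Summary struct {
	Min, Median, P99, Max int64
	Mean                  float64
}

// Summarize computes min, median, 99th percentile, max, and mean.
func Summarize(samples []int64) Summary {
	if len(samples) == 0 {
		return Summary{}
	}
	s := append([]int64(nil), samples...) // copy so the caller's order is untouched
	sort.Slice(s, func(i, j int) bool { return s[i] < s[j] })
	var sum int64
	for _, v := range s {
		sum += v
	}
	// Nearest-rank percentile: the value at 1-based position ceil(pct/100 * n).
	rank := func(pct int) int64 {
		return s[(pct*len(s)+99)/100-1]
	}
	return Summary{
		Min:    s[0],
		Median: rank(50),
		P99:    rank(99),
		Max:    s[len(s)-1],
		Mean:   float64(sum) / float64(len(s)),
	}
}

func main() {
	// Synthetic latencies stand in for measured values.
	data := make([]int64, 5000)
	for i := range data {
		data[i] = int64(i + 1)
	}
	fmt.Printf("%+v\n", Summarize(Window(data, 4001, 5000)))
}
```

Reporting a high percentile alongside the mean is a design choice worth considering here, since queue latencies tend to have long tails that an average hides.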

Amazon SQS: Performance Analysis

Let's start by examining the performance characteristics of Amazon SQS.

SQS Architecture in Our Test Setup

In our setup, we have a producer container pushing messages to an SQS queue, and a consumer container retrieving these messages. This mimics a typical microservices architecture where services communicate asynchronously via message queues.

SQS Performance Verification

To understand SQS performance, we'll look at three scenarios with different message production rates.

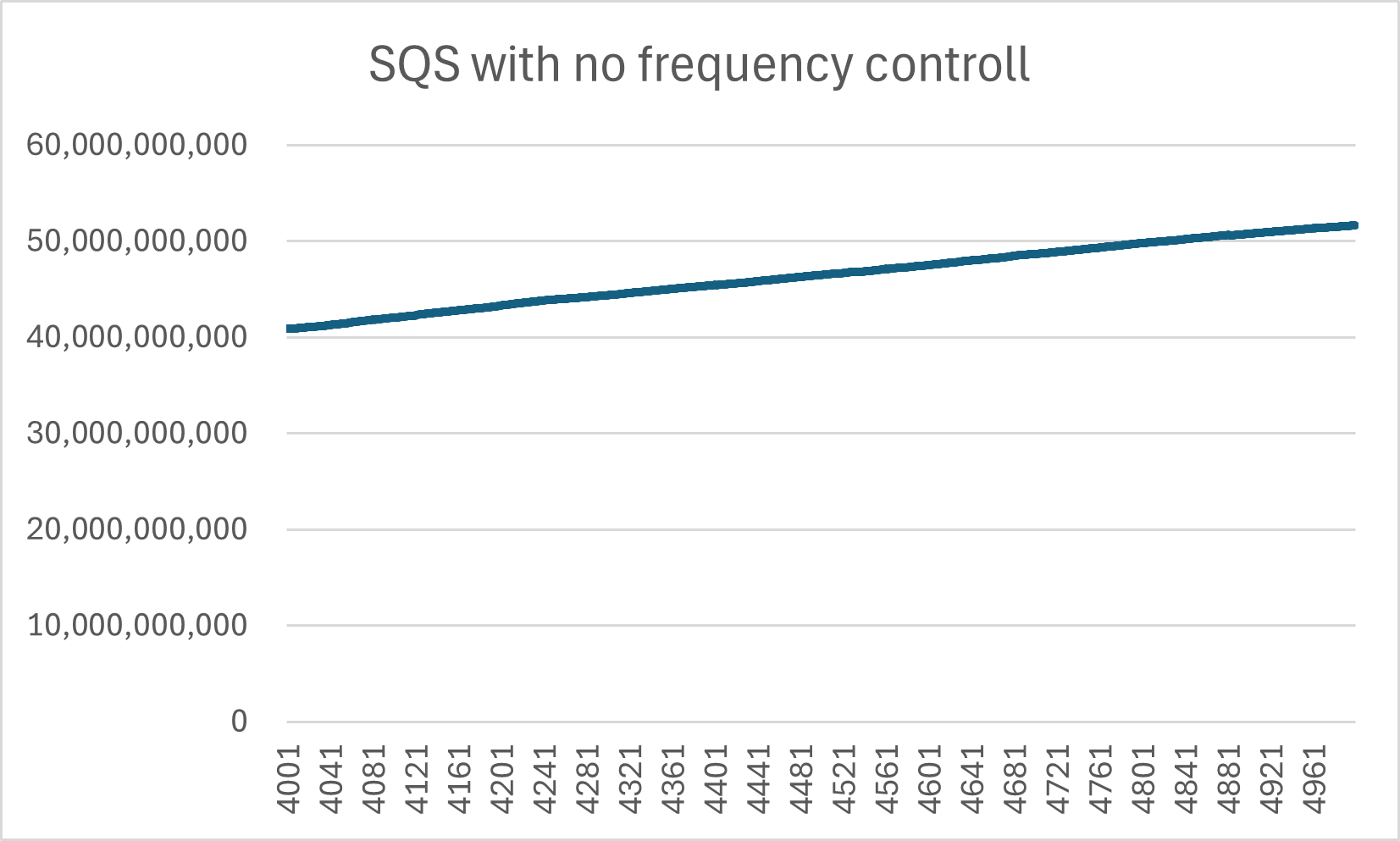

Scenario 1: No Frequency Control

First, let's examine what happens when we push messages to SQS as fast as possible, without any artificial delay:

These results reveal an interesting characteristic of SQS: there's a significant delay between message production and consumption. This is primarily due to SQS's polling nature - consumers must repeatedly ask SQS if there are any messages available.
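A toy model helps show why polling adds delay when messages arrive in a burst. The sketch below is a simplification and not a description of SQS internals: it assumes a consumer that polls at a fixed interval and takes at most a fixed batch per poll. The batch size of 10 mirrors the maximum that a single SQS `ReceiveMessage` call can return, while the 1 ms poll interval and the `SimulatePolling` function are hypothetical.

```go
package main

import "fmt"

// SimulatePolling returns the per-message latency (in ns) for a consumer that
// polls every pollEvery ns and takes at most batch messages per poll.
// arrivals holds message creation times in ns and must be sorted ascending.
func SimulatePolling(arrivals []int64, pollEvery int64, batch int) []int64 {
	lat := make([]int64, 0, len(arrivals))
	if pollEvery <= 0 || batch <= 0 {
		return lat
	}
	next := 0 // index of the oldest message not yet delivered
	for now := pollEvery; next < len(arrivals); now += pollEvery {
		// Take up to batch messages that have already arrived by this poll.
		for taken := 0; taken < batch && next < len(arrivals) && arrivals[next] <= now; taken++ {
			lat = append(lat, now-arrivals[next])
			next++
		}
	}
	return lat
}

func main() {
	// 30 messages produced at the same instant: a backlog forms and drains
	// one batch per poll, so later messages wait longer.
	burst := make([]int64, 30)
	lat := SimulatePolling(burst, 1_000_000, 10)
	fmt.Println("first:", lat[0], "ns, last:", lat[len(lat)-1], "ns")
}
```

In this model the last message of a burst waits three poll intervals, while the same 30 messages spread one per interval would each wait at most one. That is consistent with the pattern described in this section, though real SQS latency also depends on network round trips and long-polling settings.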

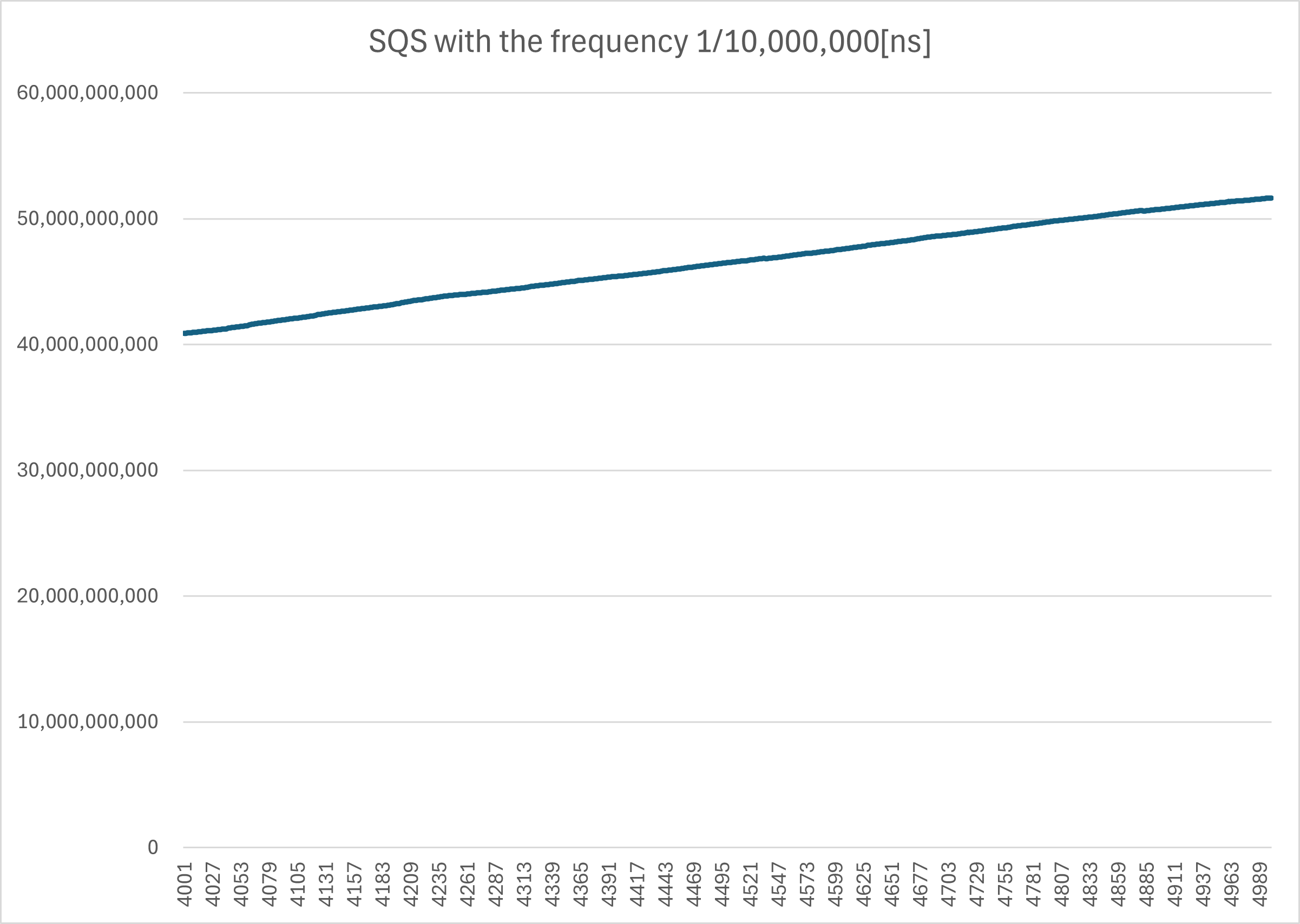

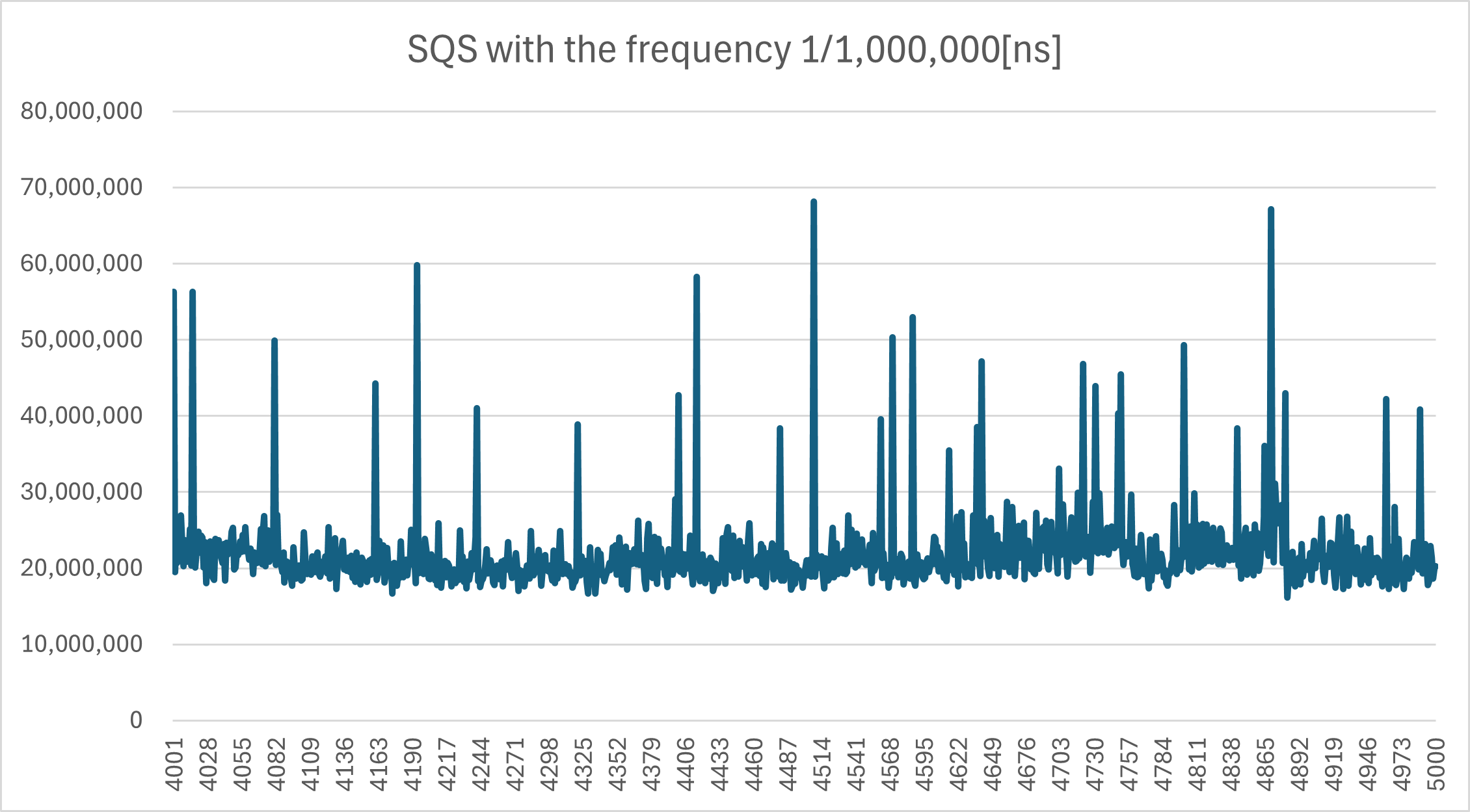

Scenario 2: Moderate Frequency Control

Next, we introduced a delay of 100,000 nanoseconds (1/10,000 of a second) between each message:

With this moderate delay, we see a more consistent pattern in message retrieval times. The overall throughput is lower, but the system behaves more predictably.
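Frequency control of this kind usually comes down to sleeping between sends. The following is a minimal sketch under that assumption; `Produce` and `MaxRate` are hypothetical helpers, and the `send` callback stands in for whatever call pushes a message to SQS or Kinesis.

```go
package main

import (
	"fmt"
	"time"
)

// Produce calls send n times, sleeping for delay between consecutive calls.
// A zero delay reproduces the "as fast as possible" loop.
func Produce(n int, delay time.Duration, send func(seq int, createdNs int64)) {
	for i := 0; i < n; i++ {
		send(i, time.Now().UnixNano())
		if delay > 0 && i < n-1 {
			time.Sleep(delay)
		}
	}
}

// MaxRate returns the upper bound on messages per second implied by delay.
// It returns 0 when delay is not positive, meaning the delay imposes no limit.
func MaxRate(delay time.Duration) float64 {
	if delay <= 0 {
		return 0
	}
	return float64(time.Second) / float64(delay)
}

func main() {
	delay := 100 * time.Microsecond // 100,000 ns
	Produce(3, delay, func(seq int, createdNs int64) {
		fmt.Println("sent", seq, "at", createdNs)
	})
	fmt.Println("upper bound (msg/s):", MaxRate(delay))
}
```

The bound is only theoretical: `time.Sleep` guarantees a pause of at least the requested duration, and each send takes time of its own, so the achieved rate is lower, especially for very short delays.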

Scenario 3: Low Frequency Control

Finally, we set the delay to 10,000 nanoseconds (1/100,000 of a second):

With this delay, SQS performs very consistently, with minimal variation in message retrieval times.

Key Takeaways from SQS Performance

- SQS shows higher latency at very high message production rates.

- Performance becomes more consistent and predictable at lower message frequencies.

- SQS is well-suited for applications that don't require extremely low latency but need reliable message delivery.

Amazon Kinesis: Performance Analysis

Now, let's turn our attention to Amazon Kinesis and examine its performance characteristics.

Kinesis Architecture in Our Test Setup

Our Kinesis setup involves a producer container pushing records to a Kinesis Data Stream, and a consumer container using the Kinesis Client Library (KCL) to retrieve and process these records.

Kinesis Performance Verification

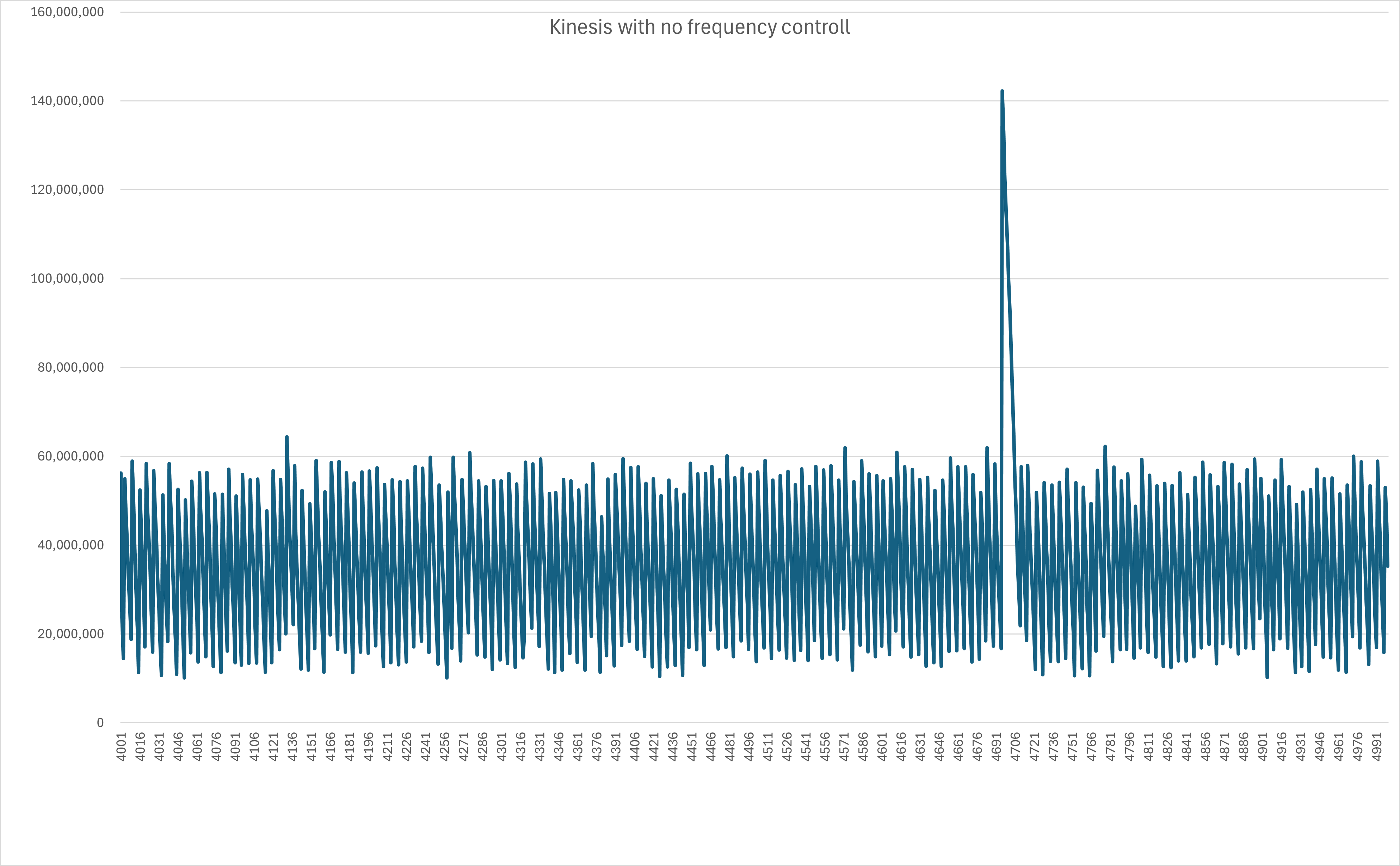

For Kinesis, we'll focus on its performance without any artificial delays in message production, as Kinesis is designed to handle high-throughput scenarios.

These results demonstrate Kinesis's strength in handling high-volume, real-time data streams. We observe:

- Consistently low latency between record production and consumption.

- High throughput capability, with messages processed roughly every 40,000 nanoseconds (about 25,000 messages per second).

- More predictable performance under high load compared to SQS.

Key Takeaways from Kinesis Performance

- Kinesis excels in scenarios requiring high throughput, although a difference in latency could not be confirmed under low load.

- It provides more consistent performance under high load compared to SQS.

- Kinesis is well-suited for real-time data streaming applications, such as log and event data collection, real-time analytics, and IoT device data ingestion.

Comparative Analysis: SQS vs Kinesis

Now that we've examined the performance characteristics of both SQS and Kinesis, let's compare them across several key factors:

- Latency:

  - Under low load conditions, both SQS and Kinesis exhibit similar latency, approximately 20,000,000,000 nanoseconds (20 seconds).

  - However, under high load scenarios, Kinesis significantly outperforms SQS in terms of latency. When messages are published rapidly using a simple for loop, Kinesis maintains low latency, while SQS's latency increases substantially.

- Throughput:

  - Both services can handle high throughput, but they behave differently under pressure.

  - Kinesis maintains consistent performance even with continuous, rapid message publishing.

  - SQS's performance becomes less predictable as message frequency increases, struggling to keep up with processing under high-load conditions.

- Scalability:

  - Both services are highly scalable, but they scale differently.

  - SQS scales by adding more queues or increasing the number of consumers.

  - Kinesis scales by adding shards to a stream.

- Message Ordering:

  - SQS FIFO queues provide strict ordering, but with lower throughput.

  - Kinesis maintains order within each shard, making it suitable for applications that require both high throughput and message ordering.

- Complexity:

  - As discussed in my previous article, Kinesis has a steeper learning curve and more complex operational requirements.

  - SQS, by comparison, is simpler to set up and manage.

- Cost:

  - SQS is generally less expensive for lower volume applications.

  - Kinesis can be more cost-effective for high-volume, real-time data streaming scenarios.

- Use Case Fit:

  - SQS is ideal for decoupling application components and handling background jobs.

  - Kinesis is better suited for real-time analytics, log and event data processing, and IoT data ingestion.
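The scalability and ordering points for Kinesis are connected: Kinesis Data Streams hashes each record's partition key with MD5 into a 128-bit integer and routes the record to the shard whose hash key range contains that value. The sketch below illustrates the idea under a simplifying assumption that the shards split the hash space into equal ranges, which need not hold after resharding. `ShardIndex` is a hypothetical helper, not an SDK function.

```go
package main

import (
	"crypto/md5"
	"fmt"
	"math/big"
)

// ShardIndex maps a partition key to one of shardCount equally sized
// hash-key ranges. It returns -1 if shardCount is not positive.
func ShardIndex(partitionKey string, shardCount int) int {
	if shardCount <= 0 {
		return -1
	}
	sum := md5.Sum([]byte(partitionKey))
	h := new(big.Int).SetBytes(sum[:])            // 128-bit unsigned hash value
	space := new(big.Int).Lsh(big.NewInt(1), 128) // size of the hash space, 2^128
	// index = floor(h * shardCount / 2^128)
	idx := new(big.Int).Mul(h, big.NewInt(int64(shardCount)))
	idx.Div(idx, space)
	return int(idx.Int64())
}

func main() {
	for _, key := range []string{"device-1", "device-2", "device-1"} {
		fmt.Printf("%s -> shard %d\n", key, ShardIndex(key, 4))
	}
}
```

Because the mapping is deterministic, records that share a partition key always land on the same shard, which is what preserves their relative order. Adding shards spreads keys over more ranges, and a single hot key still cannot exceed one shard's capacity.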

Conclusion: Choosing Between SQS and Kinesis

After this comprehensive analysis, we can conclude that Kinesis offers superior performance in terms of latency and consistency under high load. However, SQS is often sufficient - and sometimes preferable - for many web applications and microservices architectures, particularly those with moderate, consistent message volumes.

Here's a guideline for choosing between the two:

Choose SQS if:

- Your application doesn't require extremely low latency under high load

- You need a simple, cost-effective solution for decoupling application components

- You're working with a traditional queue-based architecture

- You want to minimize operational complexity

- Your message volumes are low to moderate and consistent

Choose Kinesis if:

- You need real-time data streaming with very low latency, especially under high load

- Your use case involves processing millions of events per second

- You require consistent performance with rapid, continuous message publishing

- You're building applications for real-time analytics, IoT data processing, or log and event data handling

Remember, as I pointed out in my previous article about Kinesis, the added complexity of Kinesis can be substantial. Unless your application specifically requires the real-time streaming capabilities and high-load performance of Kinesis, SQS often provides a more straightforward, cost-effective solution that's easier to implement and maintain.

In the end, the choice between SQS and Kinesis should be driven by your specific use case, performance requirements (especially under high load), operational capabilities, and budget constraints. By understanding the strengths and limitations of each service, you can make an informed decision that best serves your application's needs.